Priming the Machine: How I Get Consistent Code Out of AI by Playing Mind Games With It

It’s 11 PM on a Tuesday. I’m building a presentation for tomorrow and I notice something I’ve been circling for weeks: the gap between what I want a model to produce and what it actually produces comes down to one thing.

Not the prompt. Not the model. The cognitive frame it’s operating in when it starts writing.

Let me explain.

The Setup

I’ve been running an experiment for 2-3 weeks now. The question is deceptively simple:

How do you prime a model to lock into a single “mindset” (high-level, abstract reasoning) before it writes a single line of code?

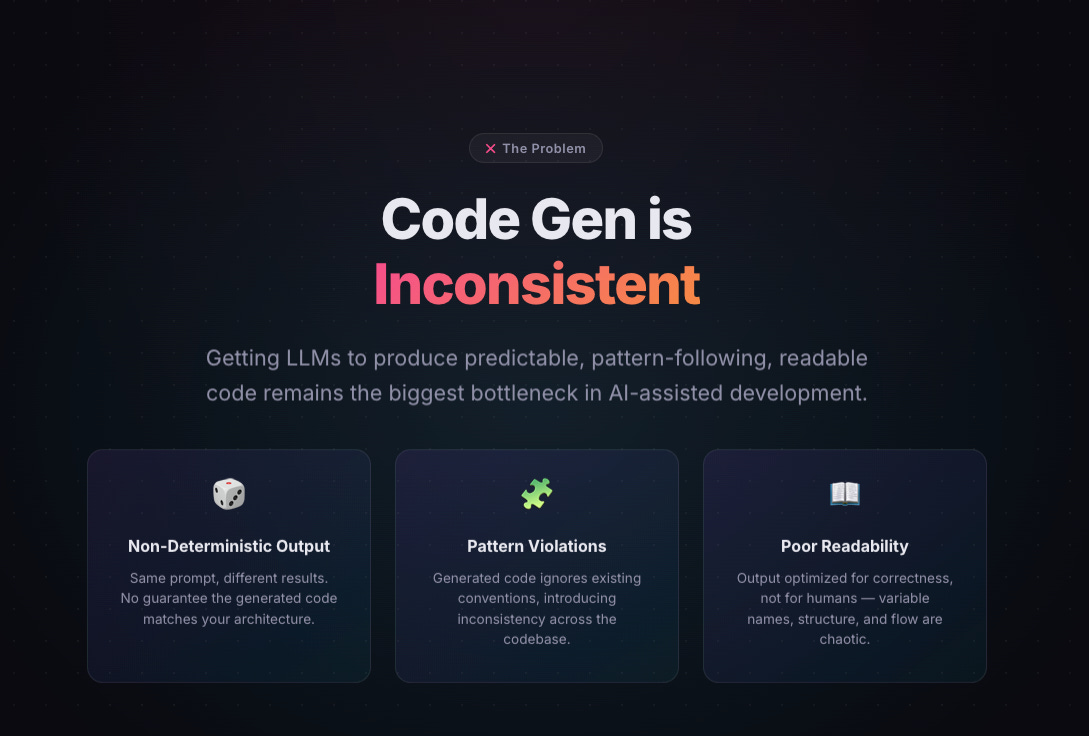

If you’ve ever tried to get consistent, pattern-following, readable code generation out of an LLM, you know the pain. You write a beautiful prompt. You get beautiful output. You run it again. Garbage. Different structure, different naming conventions, different thinking. The model didn’t change. The frame did.

This is the same problem Andy Grove described in High Output Management when he talked about process vs. output. You can’t inspect quality into a product at the end of the line. You have to build the conditions for quality before production starts. The factory floor has to be set before the first widget moves.

The model is the factory. The prompt is the widget. But nobody’s setting the floor.

Three Prompts, Three Levels of Priming

Here’s the experiment I ran, stripped to its bones.

Prompt 1 — Zero Priming (The Control)

I gave it something simple. Non-descriptive. The kind of thing most people type into ChatGPT at 2 AM:

“2. Problem — it’s hard to get consistent code gen output that follows a strict pattern and is optimized for readability”

That’s it. Just asking it to make a slide. No context. No frame. No warm-up.

What you get back is... fine. It’s the AI equivalent of a college intern who technically completed the task. The structure is generic. The examples are safe. It works, but it doesn’t think.

Prompt 2 — Frame Injection (The Primer)

Now I change one thing. Before asking for output, I give it a cognitive task:

“Focus on slide-problem. Read mindgame.md and internalize the knowledge. We will use it in this conversation.”

Notice what I’m NOT doing. I’m not giving it more details about the slide. I’m not describing what I want. I’m telling it to load a reasoning framework first. To sit with it. To internalize before producing.

This is the equivalent of a basketball player visualizing free throws before the game. The action hasn’t started yet, but the neural pathways are already firing.

Prompt 3 — Re-Reasoning (The Unlock)

Here’s where it gets interesting. After priming, I give it a non-leading prompt:

“Rewrite the 3 problems now that you have the reasoning ledger. Show a small code sample for each.”

I’m not telling it how to rewrite. I’m asking it to re-derive its own output through the new lens. The model isn’t following instructions anymore — it’s reasoning from a position.

And the output? Night and day. Consistent structure. Deliberate naming. Code that reads like it was written by someone who thought about it first.
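To make the sequence concrete, here is a minimal sketch of Prompts 2 and 3 as a chat transcript. A few assumptions, all mine: the three prompts run in one conversation (Prompt 3's "rewrite the 3 problems" suggests they do); messages use the generic role/content shape most chat APIs share; `send` is a hypothetical stand-in for whatever client you use; and for tools that can't read files on their own, the contents of mindgame.md are inlined. The framework itself isn't shown here, so the script reads whatever file you give it.

```python
from pathlib import Path
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]
# Hypothetical stand-in for a chat API: takes the full message list, returns reply text.
SendFn = Callable[[List[Message]], str]

# Prompts 2 and 3 from the experiment, verbatim.
PRIMER_PROMPT = (
    "Focus on slide-problem. Read mindgame.md and internalize the knowledge. "
    "We will use it in this conversation."
)
REREASON_PROMPT = (
    "Rewrite the 3 problems now that you have the reasoning ledger. "
    "Show a small code sample for each."
)


def run_primed_session(history: List[Message], send: SendFn,
                       framework_text: Optional[str] = None) -> List[Message]:
    """Continue an unprimed conversation with the primer, then the re-reasoning ask."""
    messages = list(history)  # copy, so the control transcript is left untouched

    # Prompt 2: load the framework and let the model answer it
    # before any new output is requested.
    primer = PRIMER_PROMPT
    if framework_text is not None:
        # Assumption: inline the file for clients that cannot read files themselves.
        primer += "\n\n--- mindgame.md ---\n" + framework_text
    messages.append({"role": "user", "content": primer})
    messages.append({"role": "assistant", "content": send(messages)})

    # Prompt 3: a non-leading request to re-derive the earlier output.
    messages.append({"role": "user", "content": REREASON_PROMPT})
    messages.append({"role": "assistant", "content": send(messages)})
    return messages


if __name__ == "__main__":
    control = [
        {"role": "user", "content": "(the unprimed slide prompt)"},
        {"role": "assistant", "content": "(the generic first draft)"},
    ]
    path = Path("mindgame.md")
    framework = path.read_text() if path.exists() else None

    def fake_send(msgs: List[Message]) -> str:
        # Placeholder reply so the sketch runs without any API.
        return f"(reply after {len(msgs)} messages)"

    for m in run_primed_session(control, fake_send, framework):
        print(f"{m['role']}: {m['content'][:60]}")
```

The point of the structure is that the model gets a full turn to respond to the framework before it is asked to produce anything; the re-reasoning prompt never says how to rewrite.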

Why This Works (The Pattern Across Domains)

Here’s the thread I keep pulling on.

In storytelling — and Matthew Dicks nails this in Storyworthy — the power of a story isn’t in the events. It’s in the transformation. The 5-second moment where something shifts inside the character. Everything before that moment is setup. Everything after is consequence.

Prompt 2 is the setup. Prompt 3 is the moment of transformation. The model doesn’t just have new information — it has a new lens. And a new lens changes everything downstream.

You see this in business too. The companies that produce consistent, high-quality output at scale — they don’t do it by writing better SOPs. They do it by hiring for mindset and then giving people frameworks to reason through. Amazon’s leadership principles. Bridgewater’s radical transparency. Netflix’s keeper test. These aren’t rules. They’re cognitive primers. They set the factory floor before the first decision gets made.

That’s exactly what mindgame.md does for the model.

The Numbers

I ran this across ~40 prompt pairs over 2-3 weeks. Here’s what I observed:

Structural consistency (does the output follow the same pattern each time): jumped from roughly 30% to over 85% with priming

Naming convention adherence: went from essentially random to near-total alignment with the framework’s style

“Readability score” (my subjective rating, 1-10, of whether the code reads like it was designed): average moved from 4 to 7.5

These aren’t peer-reviewed numbers. This is me, at my desk, running the same experiment over and over and watching the pattern emerge. But the pattern is loud.
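If you want to make the first of those metrics repeatable, here is one hypothetical way to score structural consistency. It is not necessarily how the figures above were produced. It assumes the generated code is Python, reduces each output to the ordered kinds of its top-level statements (ignoring names and bodies), and reports the share of runs that match the most common shape.

```python
import ast
from collections import Counter
from typing import List, Tuple


def structural_signature(source: str) -> Tuple[str, ...]:
    """Reduce a Python snippet to the ordered kinds of its top-level statements.

    Names and bodies are ignored, so 'import, def, def' in one run matches
    'import, def, def' in another. Unparseable output gets a sentinel signature.
    """
    try:
        tree = ast.parse(source)
    except SyntaxError:
        return ("<syntax-error>",)
    return tuple(type(node).__name__ for node in tree.body)


def structural_consistency(outputs: List[str]) -> float:
    """Fraction of runs whose signature matches the most common signature."""
    if not outputs:
        return 0.0
    counts = Counter(structural_signature(s) for s in outputs)
    _, top = counts.most_common(1)[0]
    return top / len(outputs)


if __name__ == "__main__":
    runs = [
        "import os\ndef load(): pass\ndef save(): pass",
        "import json\ndef read(): pass\ndef write(): pass",
        "class Store:\n    pass",
        "import os\ndef a(): pass\ndef b(): pass",
    ]
    print(structural_consistency(runs))  # 0.75: three of four runs share a shape
```

Because the signature deliberately ignores identifiers, naming-convention adherence would need its own check against whatever style the framework prescribes.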

What I Think Is Actually Happening

Here’s my working theory, and I genuinely want to hear yours.

LLMs don’t have “mindsets” the way we do. But they have something functionally similar: attention distributions. When you prime a model with a reasoning framework before asking it to produce, the model itself doesn’t change; its trained weights stay fixed. What changes is the context, and that shifts where attention lands, biasing the model toward a specific cluster of patterns. You’re narrowing the probability space.

Without priming, the model is sampling from the entire ocean of “how to write code.” With priming, it’s sampling from a much smaller, much more coherent pool: “how to write code given this specific way of thinking about problems.”
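Here is a toy calculation of what “narrowing” means numerically. It assumes the model chooses among four hypothetical ways to structure the same function, with made-up probabilities before and after priming, and that each run samples independently. Entropy measures how spread out the choice is; the sum of squared probabilities is the chance that two independent runs land on the same structure, which is exactly the “run it again, get something different” problem.

```python
import math
from typing import List


def entropy_bits(probs: List[float]) -> float:
    """Shannon entropy H(p) = -sum p_i * log2(p_i), skipping zero entries."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def agreement(probs: List[float]) -> float:
    """Chance that two independent samples pick the same option: sum of p_i^2."""
    return sum(p * p for p in probs)


# Made-up distributions for illustration only; nothing here was measured from a model.
unprimed = [0.25, 0.25, 0.25, 0.25]  # every structure equally likely
primed = [0.85, 0.05, 0.05, 0.05]    # the framework's structure dominates

if __name__ == "__main__":
    print(f"unprimed: {entropy_bits(unprimed):.2f} bits, agreement {agreement(unprimed):.2f}")
    print(f"primed:   {entropy_bits(primed):.2f} bits, agreement {agreement(primed):.2f}")
    # unprimed: 2.00 bits, agreement 0.25
    # primed:   0.85 bits, agreement 0.73
```

Same four options, sharper distribution: in this toy setup, the chance that two runs agree nearly triples. Real output distributions are vastly larger, and how much priming actually sharpens them is an empirical question; this only shows the arithmetic behind the intuition.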

It’s the difference between asking someone “write me some code” and asking someone “you’re an engineer who cares deeply about API readability and has strong opinions about naming conventions — now write me some code.”

Except it goes deeper than a system prompt. Because you’re not just telling the model who to be. You’re giving it a document to reason through. You’re making it practice thinking before it performs.

The Takeaway (And a Question)

If you’re using AI for code generation — or honestly, for any structured creative output — stop optimizing the ask. Start optimizing the frame.

Build your mindgame.md. Build your reasoning ledger. Give the model something to chew on before you ask it to produce. Set the factory floor.

I’m still in the middle of this experiment and I’ll share the actual framework in Part 2. But I’m curious:

Who’s reading this? What are you building? Are you a solo dev trying to ship faster? A team lead trying to standardize AI-assisted output? Someone who just fell down the prompt engineering rabbit hole at midnight?

Drop me a line. I’m genuinely curious. And I’ll share everything I’ve learned — the frameworks, the files, the failures — because the best part of finding a pattern is watching other people stress-test it.

This is Part 1 of a series on cognitive priming for AI-assisted development. Part 2 will include the actual mindgame.md framework and a walkthrough of how to build your own reasoning ledger.